Future-Proofing E-commerce: Implementing llms.txt to Secure AI Search Presence in 2026

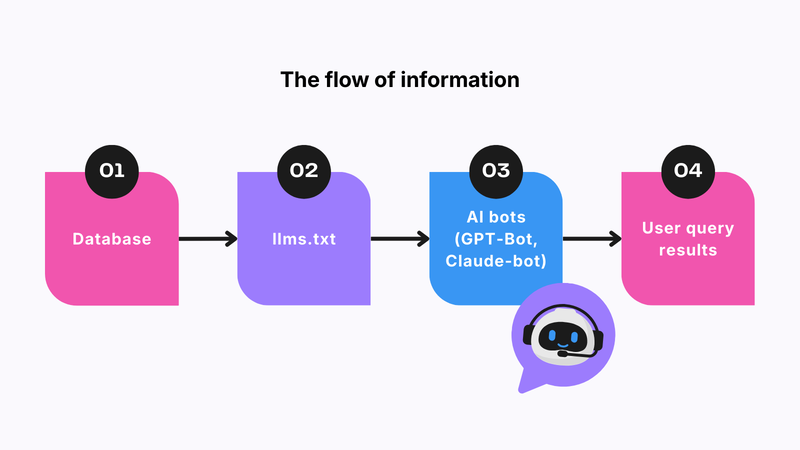

Netkodo implemented a custom llms.txt file for Scout & Nimble to transition from traditional SEO to Generative Engine Optimization (GEO). By providing a structured Markdown map of categories and an extensive FAQ, we ensured that AI models deliver factual brand data with zero impact on server performance.

Scout & Nimble operates in a premium interior design market where leadership in 2026 requires optimizing how a brand is perceived by artificial intelligence.

We proposed the llms.txt implementation as a proactive R&D initiative to ensure the client stays ahead of market trends.

Definition of llms.txt: A proposed standard text file (similar in spirit to robots.txt) that provides a condensed, Markdown-formatted version of a website’s content, designed specifically for Large Language Model (LLM) crawlers to read and understand.
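The llms.txt proposal published at llmstxt.org describes a specific Markdown layout:

- an H1 holding the site name;
- a blockquote summary;
- H2 sections containing lists of links, each optionally followed by a colon and a short note.

The Python sketch below renders that skeleton. The function and class names, store name, and URLs are hypothetical placeholders. They are not Netkodo's script or Scout & Nimble's actual file.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    title: str
    url: str
    note: str = ""  # optional description rendered after a colon


@dataclass
class Section:
    heading: str
    links: list[Link] = field(default_factory=list)


def render_llms_txt(site_name: str, summary: str, sections: list[Section]) -> str:
    """Render an llms.txt skeleton: H1 name, blockquote summary, H2 link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for section in sections:
        lines.append(f"## {section.heading}")
        lines.append("")
        for link in section.links:
            entry = f"- [{link.title}]({link.url})"
            if link.note:
                entry += f": {link.note}"
            lines.append(entry)
        lines.append("")
    # Trim trailing blank lines and end with exactly one newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    doc = render_llms_txt(
        "Example Store",  # hypothetical store name
        "Premium interior design products and services.",
        [Section("Categories", [Link("Lighting", "https://example.com/lighting", "Pendants and sconces")])],
    )
    print(doc)
```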

The primary goal is Generative Engine Optimization (GEO): ensuring AI models rely on verified brand facts rather than probabilistic hallucinations or guesswork.

In summary: we addressed the risk of AI guessing (hallucinations) by providing a factual instruction manual for the brand’s categories and sales rules.

We developed a custom technical solution tailored to the Scout & Nimble store's architecture.

| Parameter | Netkodo Custom Solution | Standard Plugin Approach |
|---|---|---|
| Generation Type | Static (generated once per 24 hours) | Often dynamic (generated per request) |
| Server Load | Zero during bot crawling | Potential spikes during intense scraping |
| Data Source | Production database | Often delayed via PIM/ERP sync |
| Format | Clean Markdown hierarchy | Often cluttered or unstructured |

Micro-example: Why pull from the database? PIM/ERP systems often contain technical noise (irrelevant for AI) and synchronization delays. By pulling directly from the production database, the AI navigates the same live category tree seen by human users, eliminating the risk of it suggesting disabled categories.
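Here is a minimal sketch of the "live category tree only" rule. It assumes a hypothetical `categories` table with `id`, `parent_id`, `name`, `slug`, and `is_active` columns, because the source does not describe the real store schema. An in-memory SQLite database stands in for the production database. A category is kept only if it and all of its ancestors are active, so a child of a disabled category cannot leak into the file.

```python
import sqlite3
from typing import Optional


def load_active_categories(conn: sqlite3.Connection) -> list[tuple[int, Optional[int], str, str]]:
    """Return (id, parent_id, name, slug) for categories visible on the storefront.

    Assumes a hypothetical `categories` table with columns
    (id, parent_id, name, slug, is_active); real schemas will differ.
    """
    rows = conn.execute(
        "SELECT id, parent_id, name, slug, is_active FROM categories ORDER BY id"
    ).fetchall()
    by_id = {row[0]: row for row in rows}

    def visible(cat_id: Optional[int]) -> bool:
        # Walk up the tree: visible only if this category and every ancestor is active.
        seen = set()
        while cat_id is not None:
            if cat_id in seen:  # guard against accidental cycles in parent_id
                return False
            seen.add(cat_id)
            row = by_id.get(cat_id)
            if row is None or not row[4]:
                return False
            cat_id = row[1]
        return True

    return [(r[0], r[1], r[2], r[3]) for r in rows if visible(r[0])]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE categories (id INTEGER PRIMARY KEY, parent_id INTEGER, "
        "name TEXT, slug TEXT, is_active INTEGER)"
    )
    conn.executemany(
        "INSERT INTO categories VALUES (?, ?, ?, ?, ?)",
        [
            (1, None, "Lighting", "lighting", 1),
            (2, 1, "Pendants", "lighting/pendants", 1),
            (3, None, "Clearance", "clearance", 0),  # disabled parent
            (4, 3, "Last Units", "clearance/last-units", 1),  # hidden via its parent
        ],
    )
    print(load_active_categories(conn))
```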

Instead of a raw list of products, we provided a structured topic hub.

Tip for developers: effective AI integration depends on structure, not exhaustive raw data. Deliver a clear map (categories) and rules of the game (FAQ), and the AI will take care of the rest.
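The FAQ is where the "rules of the game" live. The sketch below renders question/answer pairs as a Markdown section. The heading name and the bold-question layout are assumptions, since the llms.txt proposal does not prescribe a FAQ format. The sample entry is a placeholder, not one of the store's real answers.

```python
def render_faq(entries: list[tuple[str, str]], heading: str = "FAQ") -> str:
    """Render question/answer pairs as a Markdown section for llms.txt.

    The layout (H2 heading, bold question, plain answer) is an illustrative
    choice, not a format mandated by the llms.txt proposal.
    """
    lines = [f"## {heading}", ""]
    for question, answer in entries:
        # Collapse stray whitespace and line breaks that often come from CMS text.
        q = " ".join(question.split())
        a = " ".join(answer.split())
        lines.extend([f"**{q}**", a, ""])
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(render_faq([
        ("What is your return policy?",  # placeholder entry
         "Placeholder: state the store's actual return window and conditions here."),
    ]))
```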

The implementation's effectiveness was verified by entering the file URL into ChatGPT, Claude, and Perplexity.

The deployment for Scout & Nimble demonstrates that effective AI integration in e-commerce depends on providing a clear map and rules rather than exhaustive raw data.

Netkodo’s approach prioritizes technical stability and business logic, ensuring that as AI standards evolve, the brand’s data remains accessible and accurately interpreted by GPTBot, ClaudeBot, and other leading crawlers.

An llms.txt file is a machine-readable text file (typically in Markdown format), defined by a proposed community standard and placed in a website's root directory (at /llms.txt). Similar to how robots.txt provides instructions for search engine crawlers, llms.txt provides a condensed instruction manual for Large Language Models (LLMs). It serves as a primary source of truth, offering a clear map of the site’s category hierarchy, key business rules, and high-value data to ensure AI models interpret the brand accurately.

In the context of Artificial Intelligence, a hallucination occurs when a model generates information that sounds confident and fluent but is factually incorrect or unsupported by real-world data. For an e-commerce brand like Scout & Nimble, this could manifest as an AI incorrectly claiming a product is in stock, fabricating a return policy, or guessing shipping costs based on general patterns rather than the store's specific rules. The llms.txt file is designed to minimize these hallucinations by grounding the AI’s responses in verified, database-driven facts.

LLMs have a context window, which limits how much text they can process at once. If a developer lists every product in the llms.txt file, the file can exceed that limit, and anything past the cut-off may be truncated or ignored, including critical sections such as the FAQ or legal policies. By focusing on product hubs and logical category structures, the file stays within these limits, keeping the most important business rules in view.
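One way to make the priority ordering explicit is to assemble sections from most to least important. Anything that would push the file over a token budget is skipped, instead of letting the model cut the file off mid-section. This sketch rests on two stated assumptions:

- the 4-characters-per-token ratio is only a rough heuristic, because real tokenizers vary by model;
- the budget value is hypothetical, because the source gives no figure.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; real tokenizers differ by model and language."""
    return math.ceil(len(text) / chars_per_token)


def assemble_within_budget(sections: list[tuple[int, str]], max_tokens: int) -> str:
    """Join sections in priority order (lower number = more important),
    skipping any section that would push the estimated total past max_tokens.
    Newline separators are not counted, so the budget is approximate."""
    chosen: list[str] = []
    used = 0
    for _, text in sorted(sections, key=lambda s: s[0]):
        cost = estimate_tokens(text)
        if used + cost > max_tokens:
            continue  # drop low-priority bulk instead of letting the file be cut off
        chosen.append(text)
        used += cost
    return "\n".join(chosen)


if __name__ == "__main__":
    faq = "## FAQ\n\n**Placeholder question?**\nPlaceholder answer.\n"
    categories = "## Categories\n\n- [Lighting](https://example.com/lighting)\n"
    products = "## Products\n\n" + "- [Item](https://example.com/p)\n" * 5000  # oversized
    print(assemble_within_budget([(3, products), (1, faq), (2, categories)], max_tokens=8000))
```

The source avoids the problem upstream by never listing individual products. A check like this only acts as a safety net.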

The main objective is Generative Engine Optimization (GEO). By providing a machine-readable instruction manual, Netkodo ensures that AI models rely on verified brand facts and sales rules rather than probability-based assumptions or hallucinations when answering user queries about the brand.

A static file, generated every 24 hours, was selected to ensure optimal performance and security. This approach prevents AI bots from putting any additional load on the production server, ensuring a fast experience for human users while providing the bots with a ready-to-read text file.
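The static-file pattern can be sketched as a job that rebuilds llms.txt only when the current copy is older than 24 hours. The job then swaps the new version in with an atomic rename. The function names and the scheduling approach are assumptions for illustration. The source only states that the file is regenerated every 24 hours.

```python
import os
import tempfile
import time
from pathlib import Path
from typing import Callable, Optional

MAX_AGE_SECONDS = 24 * 60 * 60  # regenerate at most once per 24 hours


def is_stale(path: Path, max_age: float = MAX_AGE_SECONDS, now: Optional[float] = None) -> bool:
    """True if the file is missing or older than max_age seconds."""
    if not path.exists():
        return True
    current = time.time() if now is None else now
    return current - path.stat().st_mtime >= max_age


def write_atomically(path: Path, content: str) -> None:
    """Write to a temp file in the same directory, then rename it over the target,
    so a crawler never receives a half-written llms.txt."""
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, prefix=".llms-", suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            tmp.write(content)
        os.chmod(tmp_name, 0o644)  # mkstemp creates 0600; the web server must be able to read it
        os.replace(tmp_name, path)  # atomic on POSIX when source and target share a filesystem
    except BaseException:
        if os.path.exists(tmp_name):
            os.unlink(tmp_name)
        raise


def refresh_if_stale(path: Path, build: Callable[[], str]) -> bool:
    """Call build() and publish its output only when the file is stale.
    Returns True if the file was rewritten."""
    if not is_stale(path):
        return False
    write_atomically(path, build())
    return True
```

The web server then serves the file like any other static asset, so bot requests never reach the database. A scheduler such as cron could call `refresh_if_stale` with the generator function and the file's location. `Path("/var/www/html/llms.txt")` is one placeholder example of such a location.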

We made a strategic decision to exclude thousands of individual product links because AI model context windows have operational limits. Over-saturating the file could cause the AI to stop reading halfway through, potentially missing critical high-value information such as return policies or brand FAQs.

The system is fully scalable because it operates on a logical category tree rather than an itemized list. If Scout & Nimble doubles its inventory, the AI will still follow the same stable category map and product hubs, so the llms.txt file does not grow too large or complex for the AI to process.
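The scalability argument follows from what gets rendered. The sketch below turns category rows into a nested Markdown list, so output length depends on the number of categories, not products. The `(id, parent_id, name, url)` row shape is hypothetical. Alphabetical ordering stands in for whatever sort order the storefront actually uses.

```python
from collections import defaultdict
from typing import Optional


def render_category_tree(categories: list[tuple[int, Optional[int], str, str]]) -> str:
    """Render (id, parent_id, name, url) rows as a nested Markdown list.

    Output size grows with the number of categories, not products, so adding
    inventory to existing categories leaves the file unchanged.
    """
    children = defaultdict(list)
    for cat_id, parent_id, name, url in categories:
        children[parent_id].append((cat_id, name, url))

    lines = ["## Categories", ""]

    def walk(parent_id: Optional[int], depth: int) -> None:
        # Two-space indentation nests each child under its parent list item.
        for cat_id, name, url in sorted(children.get(parent_id, []), key=lambda c: c[1]):
            lines.append(f"{'  ' * depth}- [{name}]({url})")
            walk(cat_id, depth + 1)

    walk(None, 0)  # top-level categories have parent_id None
    return "\n".join(lines) + "\n"
```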

The selection focused on the most frequent questions users ask LLMs, including:

The file pulls data directly from the store’s production database, which serves as the source of truth for the frontend. This ensures the AI sees the exact same category hierarchy and blog content as the user, avoiding the synchronization delays or technical noise often found in PIM or ERP systems.

The file was tested by submitting the URL directly to models like ChatGPT, Claude, and Perplexity. The testing confirmed that the models immediately abandoned improvisation and adapted their answers to match the specific guidelines provided in the file.
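Manual testing in ChatGPT, Claude, and Perplexity can be complemented by a cheap structural check that runs before each publish. The sketch below flags:

- a missing H1;
- missing sections;
- an oversized file;
- individual product links.

The section names, character limit, and `/products/` URL pattern are hypothetical and would need to match the real store.

```python
import re


def lint_llms_txt(
    text: str,
    required_sections: tuple[str, ...] = ("Categories", "FAQ"),
    max_chars: int = 100_000,
) -> list[str]:
    """Return human-readable problems; an empty list means every check passed.

    The required section names, size limit, and the '/products/' URL pattern
    are illustrative assumptions, not part of the llms.txt proposal.
    """
    problems = []
    lines = text.splitlines()
    if not lines or not lines[0].startswith("# "):
        problems.append("first line must be an H1 with the site name")
    headings = {line[3:].strip() for line in lines if line.startswith("## ")}
    for name in required_sections:
        if name not in headings:
            problems.append(f"missing section: {name}")
    if len(text) > max_chars:
        problems.append(f"file is {len(text)} chars; limit is {max_chars}")
    # Markdown link whose URL contains /products/ suggests item-level bloat.
    if re.search(r"\]\([^)]*/products/", text):
        problems.append("individual product links found; link category hubs instead")
    return problems
```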

Netkodo utilized a modified version of its proprietary, stable script used for sitemap.xml and robots.txt. This provided full control over the Markdown hierarchy and avoided the bloat and unnecessary features often found in third-party plugins.

The most critical rule is to avoid trying to trick the AI by over-stuffing the file. Focus on a clean category structure and a reliable FAQ; if you provide a good map and clear rules of the game, the AI is intelligent enough to navigate the rest of the store effectively.

We anticipate that within a year, llms.txt will be as standard as sitemap.xml. While the sitemap is for traditional search engines, the llms.txt serves as the fundamental infrastructure for AI-assisted shopping and generative search.